Twitter today responded to calls to make it easier for people to report abusive messages received through its service, pledging to introduce a ‘report abuse’ button.

This follows a weekend of controversy for the platform as feminist campaigner Caroline Criado-Perez faced a deluge of hundreds of vile tweets, including threats to rape and kill her, after she successfully campaigned for a woman’s picture to be put on a new banknote.

Criado-Perez refused to be silenced and took to both traditional and digital media to name and shame those who’d made the threats. Twitter drew criticism from politicians on all sides. Shadow Home Secretary Yvette Cooper described their response as “weak” and “inadequate”, while the Police’s social media lead called for the company to make further changes to the platform to prevent abuse.

In the past three days, over 50,000 people have signed the petition calling for the introduction of a ‘report abuse’ button. These tens of thousands will no doubt be pleased to hear Twitter has heeded their demands, and included this functionality in the latest release of their iPhone app, with other apps and sites to follow.

But we should be careful what we wish for. A button will not, alone, rid Twitter (or the wider world) of misogyny and abuse. These are complex issues that will take more than a button to resolve. ‘Report abuse’ buttons have been widely abused on other networks, and introducing one to Twitter will create new and complex problems for individuals and brands online.

Abuse buttons are easily abused

Back in 2010 I wrote about the case of a magazine which disappeared from Facebook after falling victim to misuse of the report button. They found their page – and the personal accounts of all the admins – had disappeared overnight, with no right of appeal.

After writing that piece, I heard similar stories from social media specialists of pages shut down and valuable content lost through malicious reporting, commercial rivalry, or simply mischief-making. Community managers and social media managers have found disappearing content to be a depressingly regular occurrence. It only takes a handful of reports to have content removed automatically – putting campaigns and content at risk of malicious removal, and putting the personal accounts of the admins at risk of deletion.

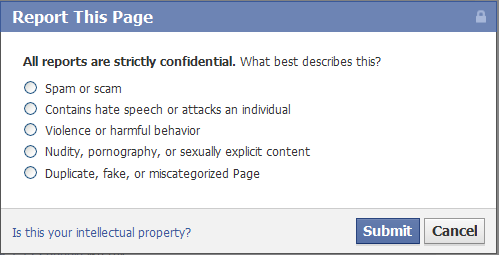

Facebook has long since made it simple to report different kinds of abuse, from breaches of terms of service to copyright violation, but provides no means by which brands and organisations can appeal when this is misused.

More recently Facebook introduced the concept of ‘protected accounts’, where pages are protected from automatic shut-down – but this isn’t a service they publicise, and is largely only available to paying advertisers.

Introduction of a similar mechanism on Twitter ironically creates a whole new means by which trolls can abuse those they disagree with. The report abuse button could be used to silence campaigners, like Criado-Perez, by taking advantage of the automatic blocking and account closure such a feature typically offers. In that way, it could end up putting greater power in the trolls’ hands.

A report feature could also be used by campaign groups to ‘bring down’ brands or high-profile individuals (such as MPs) through co-ordinated mass reporting.

The abuse button will do little to prevent abusive messages

It’s not at all clear that an abuse button will do much to prevent the use of abusive and threatening language on Twitter, either.

Unlike Facebook – which these days makes it quite difficult to register a new account, and in storing so much of your life history creates implicit incentives toward good behaviour as users truly fear having accounts deleted – the barriers to entry on Twitter are low. All you need to create a Twitter account is an email address; you can be up and running in under a minute. If users are blocked or banned for abuse, they can – and will – simply create new IDs and keep on going.

The introduction of a report button could just create a tedious game of cat and mouse in which the immature and misogynistic treat being reported and banned as a wind-up to be ignored.

Button-pushing mechanisms rarely create real change

To create real change, and really tackle the issue of abuse on Twitter (and indeed, misogyny in the wider world), we have to recognise it’s a complex problem which can’t be resolved by giving people a button to press to make it go away.

Abuse is sometimes clear-cut, but often it’s subjective. What someone may regard as a joke or sarcasm, others could see as abuse and threatening language – as the Twitter Joke Trial proved all too well.

Threats of violence and rape are, rightly, against the law (the Malicious Communications Act 1988 and section 127 of the Communications Act 2003 outlaw electronic communications which are “grossly offensive” or threatening). Writing for the Guardian, feminist writer Jane Rae argues more could be achieved by applying these existing laws.

It’s encouraging to see the UK police have already made one arrest over the threats against Criado-Perez, because seeing people being prosecuted for what is a serious crime sends a far stronger message to trolls than having their Twitter account blocked. I, for one, hope the police take action against more of those who have threatened violence.

A report button is an ineffectual knee-jerk response to the issue. That it’s been introduced in a hurry – leaving no time to ensure it’s properly thought through, resourced, or supported by processes created through discussion with law enforcement agencies – means this move is likely to do little to tackle abuse on Twitter, and will instead create new ways for people and brands to be abused.

UPDATE: Several bloggers have expressed reservations about this too, thinking more about some of the problems with trying to automate the process of identifying and tackling abuse. Here are some posts worth reading:

- Martin Belam: This isn’t a technology problem. This is a misogyny problem.

- Tom Phillips: Twitter abuse and magic wands

- Mary Hamilton: Twitter’s freedom of speech

- PME2013: Trolls and the Twitter boycott

- Brooke Magnanti: Feminists boycotting Twitter is not the way to end trolling (Telegraph online)

I’m not into Twitter trolling myself, but if I was, here’s what I’d do:

1) Create a fake account using Tor for anonymity

2) Use fake account until banned

3) Create new account

What part of that process would be stopped by a report abuse button?

And this is from someone who’d not thought about it until yesterday. For someone who does this regularly for fun, what other elaborate methods are they using that I haven’t thought of?

Perhaps requiring a small refundable deposit when you sign up for Twitter would discourage those who would serially offend and keep setting up new accounts to propagate abuse if they are banned from an old one. Obviously you would lose this deposit if banned – but it could be returned if you closed your account normally.

Aside from the disincentive to keep setting up new accounts, it would also provide a slightly stronger form of audit trail over identity. It does however require the right of appeal, checked by a human.

Facebook actually do this very well. If you want to sign up for a new account, you have to prove your identity, giving them a phone number to verify. Nearly everyone has only one phone number, and on top of that they often ask for proof of identity to be scanned and emailed. As such, there are remarkably few fake and multiple accounts on Facebook, and being banned is a big deal.

The same isn’t the case on Twitter. Lots of us (myself included) run multiple accounts, for entirely legitimate reasons. But as a result, a report button – leading to suspension or banning – is little deterrent when, as John points out above, opening a new account in order to keep on trolling can be done within seconds.

No one is claiming that a ‘report abuse’ button is anything other than a small step to help reduce online bullying; it isn’t being suggested as a wonder cure.

The problems you have highlighted are not problems of a “report abuse” button, but of a badly designed ‘report abuse’ button and background system. As you have pointed out there are things that can be done in order to maintain a useful means of simply reporting abuse that is less prone to being used mischievously. Perhaps we could feed helpful comments to Twitter while waiting to see what they produce before declaring it a failure.

I’m not sure it is possible to design a system robust enough to identify and deal with genuine abuse, while not opening the system up to abuse of the abuse button, though. Look at YouTube; it’s had a report abuse feature for years, and it’s done little or nothing to stem the tide of utterly vile misogynistic comments you see below most of their videos. Given the sheer volume of content and reports, the only advice YouTube can really offer is “switch off comments”. With 500m tweets sent every day, it will never be possible to offer a significant level of human intervention to review reports and deal with appeals, so the system will have to be an almost entirely automated one – which experience on other platforms has proved is deeply flawed.

Thanks for the reply, Sharon. I was concerned I was coming across as a bit aggressive in my comment, and I certainly apologise if it felt that way.

The type of things I was thinking about that might be useful to limit abuse include:

- some kind of action (e.g. suspension or account closure) against those who abuse the button,

- a minimum duration of Twitter account before having access to the report abuse button (to stop a mischievous person setting up a new account after abusing the button),

- flagging up a case for review if numerous reports originate from the same IP address.
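
As a rough sketch, the second and third of those safeguards could look something like this in Python. Every name and threshold here is hypothetical (the 30-day minimum age and the limit of three reports per IP are picked purely for illustration), and it says nothing about how Twitter's real system works. The first safeguard, penalising people who misuse the button, is left out because it depends on someone deciding what counts as misuse.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical thresholds, chosen only for illustration.
MIN_ACCOUNT_AGE = timedelta(days=30)
MAX_REPORTS_PER_IP = 3


@dataclass(frozen=True)
class Report:
    reporter: str               # account filing the report
    reporter_created: datetime  # when that account was registered
    ip: str                     # IP address the report came from
    target: str                 # account being reported


def triage(reports, now):
    """Split reports into (accepted, needs_human_review)."""
    # Minimum account age: drop reports from accounts that are too new.
    eligible = [r for r in reports if now - r.reporter_created >= MIN_ACCOUNT_AGE]

    # Same-IP check: count reports per (IP, target) pair.
    per_ip = Counter((r.ip, r.target) for r in eligible)

    accepted, review = [], []
    for r in eligible:
        if per_ip[(r.ip, r.target)] > MAX_REPORTS_PER_IP:
            review.append(r)    # suspicious cluster: send to a human
        else:
            accepted.append(r)
    return accepted, review
```

Even in this toy version, anything suspicious ends up in a queue that a person has to read, so the cost of human review does not go away.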

I totally agree with you that a fully robust system is limited by the availability of human intervention, but I’m not quite willing to write off the people who work at Twitter, who I’m sure are smarter than me and can think up better, workable safeguards than mine.

Again, sorry if I came across a bit aggressive earlier. Wasn’t meant at all.

I didn’t take it as aggressive at all! But as with all web communications, our readings of it can be very subjective. One man’s joke is another man’s offensive remark. The best objective standard we have is the legal test of hate speech, and that’s what should be used when judging online abuse, which is why I think it’s better tackled by existing legal safeguards than attempting to create simple technological solutions.

Those are all good ideas, but again all have possible drawbacks. Account suspension is widely used by Facebook – and thus widely abused by trolls and the disgruntled to take brand and organisation pages offline. Suspension or closure needs a means of appeal, which requires human intervention. If trolls know they can take a Twitter account offline by reporting it from three different accounts, they can do that to silence anyone.
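
To make that last point concrete, here is a minimal sketch of a naive automatic rule. It is an illustration only: the threshold of three comes from the example above, and the function and account names are made up rather than anything Twitter or Facebook has published.

```python
# Hypothetical threshold: suspend after reports from this many distinct accounts.
SUSPEND_THRESHOLD = 3


def should_auto_suspend(reporters):
    """Naive rule: suspend once enough distinct accounts have reported."""
    return len(set(reporters)) >= SUSPEND_THRESHOLD


# Three genuine victims and three throwaway troll accounts look identical.
genuine = ["alice", "bob", "carol"]
sockpuppets = ["troll_1", "troll_2", "troll_3"]

print(should_auto_suspend(genuine))      # True
print(should_auto_suspend(sockpuppets))  # True
```

The rule only counts distinct reporters, so it cannot tell the two cases apart. Telling them apart takes context, which brings us back to human review.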

Minimum times before reporting are flawed too. Trolls (of the old-school, winding-people-up-on-forums variety) keep a stock of usernames in their back pocket for when they’re inevitably banned.

As a service used primarily on mobile, users can change IP several times a day. And again, when we say ‘flagged for review’ that means review by humans. I’m not saying that Twitter can’t contribute anything to tackling this, but rather that it is naive to think a simple technological solution can solve a problem as complex as trolling.

Online communities usually operate with (volunteer) moderators to manage this sort of thing. They could hide a posting and refer it upwards, temporarily suspend a user’s tweeting rights, etc.

Good points, but worth noting that the “report this tweet” button was introduced to the iOS app a couple of weeks ago in an update. It isn’t a response to this issue, or to the petition – The Telegraph, I think, got the chronology telescoped.

Thanks for clarifying. I’ve just checked and the button has indeed been available (via the iPhone app, anyway) for a short while. But that 75,000 so far have signed an online petition calling for the introduction of something that already existed as “something must be done” is a stunning example of knee-jerk slacktivism. If people will sign a petition that easily, how can we be confident that a report button will be used in a considered manner?

It also raises a number of questions. If the report button already existed, why did no one use it in this case? Or is it the case that they did, but it didn’t make a difference?

(oops – sorry, double post!)

I think there are a few things to note about that. One is that the update was announced “below the fold” – i.e. you would have to press “more” to find out about it even if you read your iOS update log. So, it depends on people knowing that it exists, which is problem one.

The next is that even if you were aware of its existence you’d have to be conducting the debate on the mobile web or an iOS device to have easy access to it. I don’t have the stats to hand, but a lot of people still primarily use either http://www.twitter.com or TweetDeck for most of their tweeting – especially if they are doing something typing-heavy. Of course, at that point they have the power to use the web-based reporting system, but again that is not well-promoted, and if you are getting 50 threats an hour reporting them would probably take… about an hour.

The third is that pressing the “report” button ultimately flows you into the (web-based) reporting function – so, it culminates in needing to fill out a form, which is pretty bad UX for a mobile user.

And the fourth is probably that confidence in the reporting process is pretty low. Anita Sarkeesian noted that Twitter responded to her reporting a tweet saying “I will rape you when I get the chance” by saying that this post did not contravene their guidelines.

So, lack of promotion, lack of universality, lack of UX/UI integration and lack of confidence, essentially, are reasons why it might not have been used.

(I wrote a little more on this at http://www.forbes.com/sites/danielnyegriffiths/2013/07/30/twitter-abuse-criado-perez-creasy/ )

An excellent piece, thank you. I agree completely that Twitter sorely needs a sign-up 2.0.

Also, how about if the report abuse could only operate on individual tweets directly received as @tweets? Then if the abused never tweets the abuser, the abuser would have nothing to act on, no?

As no system is ever perfect, the current very imperfect system must surely evolve. I feel an improved iteration of report abuse, delivered very soon, will mostly help, and will also show that the majority are happy, or won’t mind, if the current abuse ‘free-for-all’ gets a little harder to get away with.

PS I’d signed the petition!

I saw this “straw man” solution. What do you think?

http://www.chyp.com/media/blog-entry/anonymity-privilege-or-right

You are right that a report abuse button is problematic. I propose a panic button. I’d love to hear what you think. Thanks.

http://flay.jellybee.co.uk/2013/07/panic-mode-my-proposal-to-curb-twitter.html
